Dall-E2 vs. Stable Diffusion: A Comparison of AI Image Generators

Introduction to AI Image Generators

In the realm of text-to-image AI tools, numerous options are readily available. However, two applications that particularly stand out are Dall-E2 and Stable Diffusion. Each has its distinct advantages and drawbacks, prompting the question: which one reigns supreme?

To answer this, I will analyze the image outputs from both AI models using identical text prompts. But first, let's clarify what each tool entails.

What is Dall-E2?

Dall-E2 is an AI system developed by OpenAI and announced in April 2022 as the successor to the original Dall-E, which was unveiled on January 5, 2021. The original model was a 12-billion parameter version of the GPT-3 transformer. Dall-E2 instead pairs OpenAI's CLIP model, which links text and images, with a diffusion-based image decoder, allowing it to interpret natural language inputs and produce corresponding visuals.

What is Stable Diffusion?

Stable Diffusion is a latent text-to-image diffusion model that uses a frozen CLIP ViT-L/14 text encoder to condition generation on the text prompt, an approach similar to Google's Imagen. The "diffusion" process starts from random noise and refines it step by step, removing noise so that the image aligns more closely with the given text description.
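To make the "start with noise, then refine" idea concrete, here is a toy sketch in Python. It is not how Stable Diffusion is implemented: the real model uses a neural network, conditioned on the text embedding, to predict the noise in a compressed latent image. In this sketch the function `toy_denoise`, the five-value `target` standing in for "what the prompt describes", and the `step_size` parameter are all illustrative assumptions, and the "denoiser" cheats by comparing against the known target.

```python
import random


def toy_denoise(target, steps=50, step_size=0.2, seed=0):
    """Toy illustration of iterative denoising (NOT real Stable Diffusion)."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    x = [rng.gauss(0.0, 1.0) for _ in target]
    errors = []
    for _ in range(steps):
        # Stand-in for the learned denoiser. A real model predicts the noise
        # from the current image and the text embedding; here we assume the
        # target is known and compute the "noise" directly.
        predicted_noise = [xi - ti for xi, ti in zip(x, target)]
        # Remove a fraction of the predicted noise at each step.
        x = [xi - step_size * ni for xi, ni in zip(x, predicted_noise)]
        # Record the mean absolute distance from the target after this step.
        errors.append(sum(abs(xi - ti) for xi, ti in zip(x, target)) / len(target))
    return x, errors


# Hypothetical 5-"pixel" image that the prompt is assumed to describe.
target = [0.0, 0.25, 0.5, 0.75, 1.0]
image, errors = toy_denoise(target)
print(f"error after first step: {errors[0]:.6f}")
print(f"error after last step:  {errors[-1]:.6f}")
```

Each pass removes a little more noise, so the error shrinks with every step. That gradual refinement is the part this sketch shares with the real model.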

Now, let's delve into a side-by-side comparison of their results.

Portraits

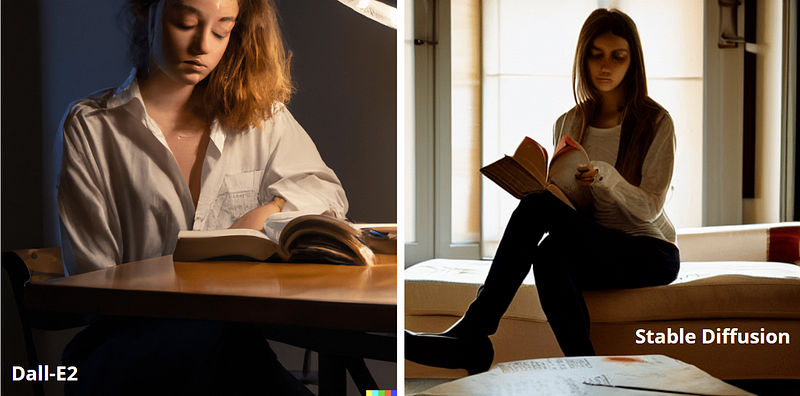

Prompt: Portrait of a girl in a coffeeshop, reading a book, dramatic lighting

Image by Jim Clyde Monge

The facial features in the left image, from Dall-E2, appear more refined, and the dramatic lighting enhances the overall atmosphere. In contrast, Stable Diffusion seems to have misinterpreted the setting, depicting the girl sitting on a couch in a hotel rather than in a coffeeshop.

Animals

Prompt: Portrait of a cute cat sitting by the window

Image by Jim Clyde Monge

In this instance, Dall-E2 is the clear winner, producing a more lifelike cat than Stable Diffusion.

Architecture

Prompt: A futuristic architecture building

Image by Jim Clyde Monge

I appreciate the architectural design produced by Stable Diffusion for this prompt; it exhibits remarkable detail and a futuristic flair. However, Dall-E2 also offers a contemporary yet simpler interpretation.

Landscapes

Prompt: A realistic photo of a beautiful landscape

Image by Jim Clyde Monge

Dall-E2 consistently generates realistic landscape images, while the output from Stable Diffusion leans toward a more artistic representation.

Painting

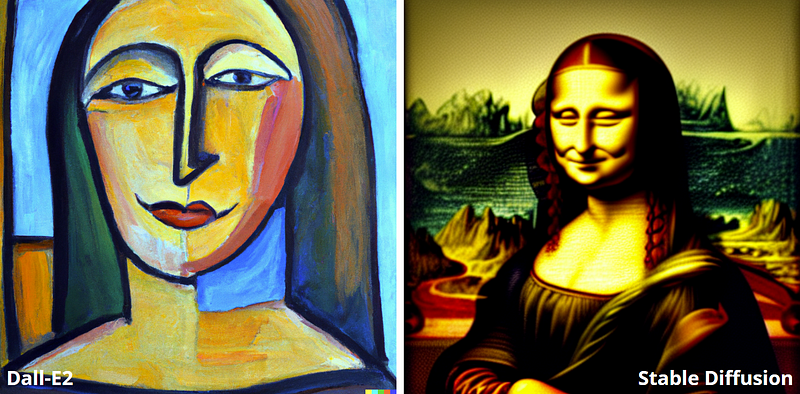

Prompt: A painting of Mona Lisa in Pablo Picasso style

Image by Jim Clyde Monge

The left image, from Dall-E2, captures Picasso's style more effectively than the right, giving Dall-E2 a clear advantage in this category.

Robot

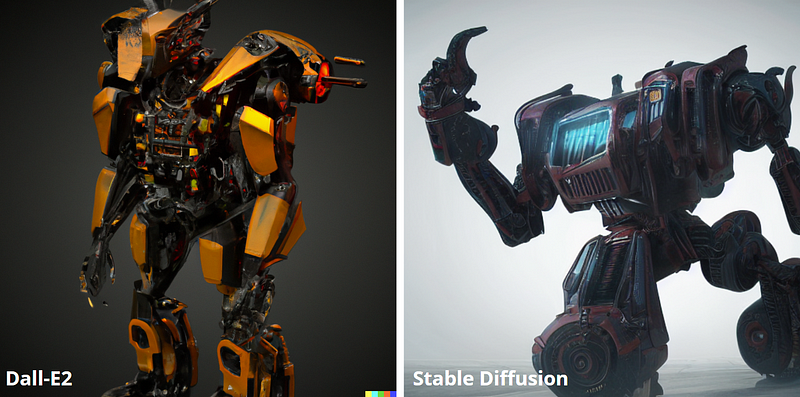

Prompt: Super cool transformer robot, highly detailed, smooth, octane render

Image by Jim Clyde Monge

Choosing a favorite between the two outputs is challenging, as both present equally impressive details and concepts.

Dragon

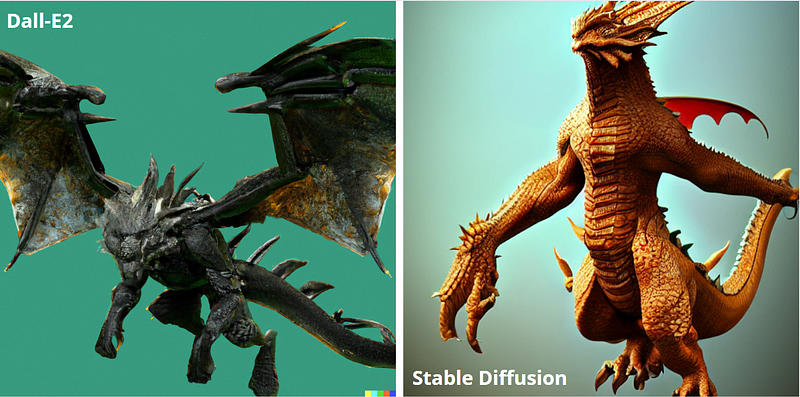

Prompt: Very cool full-body dragon in attack stance, highly detailed, octane render, trending on ArtStation

Image by Jim Clyde Monge

Both AI tools produce striking depictions of dragons, but my preference leans toward the left image, which, as in the earlier comparisons, is the Dall-E2 output.

Complex Prompts

Prompt: The effects of social media on women

Image by Jim Clyde Monge

For this prompt, I was curious to see how each AI would approach a complex theme. Interestingly, both opted for a single portrait of a girl rather than depicting a group of women.

Final Thoughts

So, which tool is superior? Based on the results presented, both Dall-E2 and Stable Diffusion exhibit their own strengths and weaknesses.

Resolution is less of a differentiator than it might seem: Dall-E2 outputs 1024x1024 images, while Stable Diffusion was trained at 512x512 and can generate images up to 1024x1024 pixels in DreamStudio. In terms of quality, Dall-E2 tends to outperform Stable Diffusion. However, Stable Diffusion is less restrictive about its text prompts, allowing for the creation of images featuring well-known individuals like celebrities and politicians.

On price, Stable Diffusion (through DreamStudio) costs roughly a tenth as much as Dall-E2. Both AI tools are still developing, and they are poised to transform how we create and consume visual content, with a significant impact on various industries.
