Exploring GPT4All: Your Local Open-source Chatbot Solution


Introduction to Rapid AI Developments

The landscape of Large Language Models (LLMs) is evolving swiftly, with groundbreaking advancements like ChatGPT and the more recent GPT-4. To clarify, GPT stands for Generative Pre-trained Transformer, the underlying model, whereas ChatGPT is its conversational application. Bill Gates has underscored the significance of OpenAI's work, writing that "The Age of AI has begun." If you're struggling to keep pace with these developments, you're not alone: more than 1,000 researchers and tech figures recently signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4.

Despite these impressive technical feats, many of these models remain closed. OpenAI, its name notwithstanding, has been criticized for its lack of transparency and is even humorously dubbed "ClosedAI" by some. As a result, the tech community is actively seeking open-source alternatives.

For those who may have missed recent updates, Meta's LLaMA (available on GitHub) was reported by Meta to outperform GPT-3 on most benchmarks with its 13-billion-parameter version, despite being more than ten times smaller. Although it isn't fully open-source, users can access the model weights after registering. This approach has encouraged community-driven development, leading to projects like llama.cpp, a C/C++ port that runs LLaMA on ordinary CPUs, and Stanford's Alpaca, which fine-tunes LLaMA into an instruction-following model similar to ChatGPT. This article focuses on GPT4All, a newly released open-source alternative.

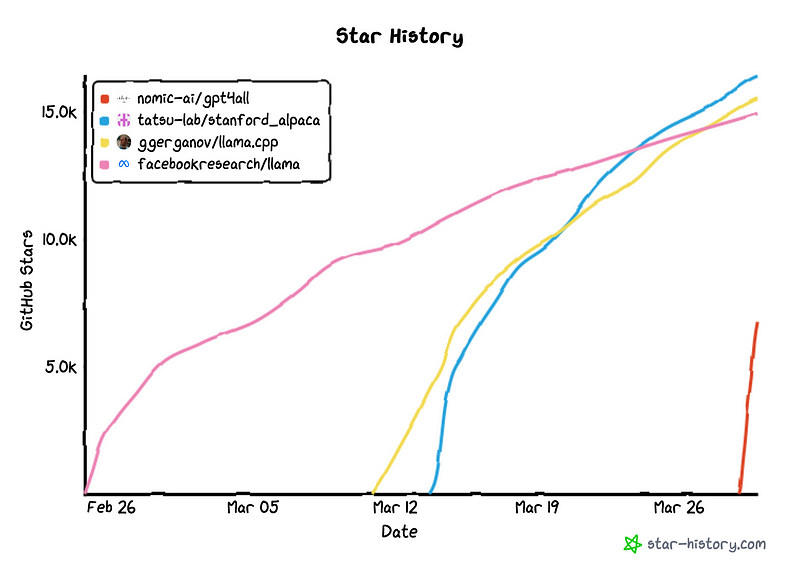

To illustrate how quickly these open-source projects are moving, consider their popularity on GitHub. For context, the widely used PyTorch framework accumulated approximately 65,000 stars over six years. In contrast, the chart above shows the stars that projects like GPT4All, Alpaca, and llama.cpp gathered in just one month.

Introducing GPT4All

GPT4All is an assistant-style chatbot developed by Nomic AI and recently made publicly available. But how do you build a ChatGPT-like chatbot on top of an existing model like LLaMA? Surprisingly, the answer is to learn from ChatGPT itself: query it and use its answers as training data. For GPT4All, the team compiled a diverse set of questions and prompts from publicly available sources and sent them to ChatGPT (specifically GPT-3.5-Turbo), generating 806,199 prompt-response pairs. After curation, the dataset covered a broad range of topics, and the resulting model is reported by its authors to outperform several comparable open models.
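The recipe above is essentially distillation: prompts go to GPT-3.5-Turbo, the responses become training data, and LLaMA is fine-tuned on the curated pairs. The curation step is the easiest part to illustrate offline. Below is a minimal Python sketch; the `Pair` type, the refusal markers, and the deduplication rule are hypothetical illustrations chosen for this example, not GPT4All's actual pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    prompt: str
    response: str


# Hypothetical filtering rules for illustration only (not GPT4All's real ones).
REFUSAL_MARKERS = ("as an ai language model", "i'm sorry, but i cannot")


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical prompts match."""
    return " ".join(text.lower().split())


def curate(pairs: list[Pair], min_response_chars: int = 1) -> list[Pair]:
    """Keep the first acceptable response for each distinct prompt."""
    seen_prompts: set[str] = set()
    kept: list[Pair] = []
    for pair in pairs:
        key = normalize(pair.prompt)
        response = pair.response.strip()
        if key in seen_prompts:
            continue  # a response for this prompt was already kept
        if len(response) < min_response_chars:
            continue  # drop empty or whitespace-only generations
        if any(marker in response.lower() for marker in REFUSAL_MARKERS):
            continue  # drop boilerplate refusals; a later duplicate may still be kept
        seen_prompts.add(key)
        kept.append(pair)
    return kept
```

Marking a prompt as "seen" only after a response is accepted means a refusal does not block a later, usable answer to the same prompt.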

A key highlight of GPT4All is the release of a 4-bit quantized version of the model. Quantization stores each weight with fewer bits (here 4 instead of the usual 16 or 32), trading a little accuracy for a much smaller model. Unlike ChatGPT, which runs on specialized hardware such as Nvidia's A100 (priced at around USD 15,000), GPT4All can run on standard consumer-grade devices. Let's explore how to set it up on your own machine.
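To make "4-bit" concrete, here is a minimal Python sketch of a simplified symmetric, block-wise quantization scheme: each block of weights shares one floating-point scale, and each weight becomes a signed 4-bit integer code. The real on-disk format of the GPT4All checkpoint differs in its details; the block contents and the 7-billion-parameter figure below are assumptions for illustration.

```python
def quantize_block(weights: list[float]) -> tuple[float, list[int]]:
    """Symmetric 4-bit quantization of one block of weights.

    Returns one float scale plus one signed 4-bit code (-8..7) per weight.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # largest magnitude maps to +/-7
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale: float, codes: list[int]) -> list[float]:
    """Recover approximate weights; each is off by at most about scale / 2."""
    return [c * scale for c in codes]


def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    block = [0.12, -0.70, 0.33, 0.02]
    scale, codes = quantize_block(block)
    print("codes:", codes)
    print("restored:", [round(w, 3) for w in dequantize_block(scale, codes)])
    # Assumed 7-billion-parameter model, for a back-of-the-envelope comparison.
    for bits in (32, 16, 4):
        print(f"{bits:>2}-bit: {model_size_gb(7e9, bits):.1f} GB")
```

Storing the per-block scales adds overhead on top of the bare 4 bits per weight, which is one reason a real 4-bit checkpoint is somewhat larger than the simple estimate.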

Setting Up GPT4All

Getting GPT4All up and running is straightforward if you have Python installed. Simply follow the setup instructions provided in the GitHub repository.

- Download the quantized checkpoint (approximately 4.2 GB). My download speed was about 1.4 MB/s, so the download alone took roughly 50 minutes.

- Clone the repository.

- Move the checkpoint into the chat directory.

- Set up the environment and install the necessary requirements.

- Run the binary for your platform.
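The last three steps can be scripted. The Python sketch below checks that the checkpoint sits in the chat directory and picks the binary for the current platform. Only the macOS M1 binary name appears in this article; the checkpoint file name `gpt4all-lora-quantized.bin`, the other binary names, and the helper `locate_binary` are assumptions that follow the same naming pattern, so check them against the repository.

```python
import platform
from pathlib import Path

# Assumed names: only "gpt4all-lora-quantized-OSX-m1" is confirmed by the article.
CHECKPOINT = "gpt4all-lora-quantized.bin"
BINARIES = {
    ("Darwin", "arm64"): "gpt4all-lora-quantized-OSX-m1",
    ("Darwin", "x86_64"): "gpt4all-lora-quantized-OSX-intel",
    ("Linux", "x86_64"): "gpt4all-lora-quantized-linux-x86",
    ("Windows", "AMD64"): "gpt4all-lora-quantized-win64.exe",
}


def select_binary(system: str, machine: str) -> str:
    """Map platform.system()/platform.machine() values to a binary name."""
    try:
        return BINARIES[(system, machine)]
    except KeyError:
        raise ValueError(f"no prebuilt binary assumed for {system}/{machine}") from None


def locate_binary(repo_dir: str) -> Path:
    """Return the absolute path of the chat binary, after checking the checkpoint."""
    chat_dir = Path(repo_dir).resolve() / "chat"
    if not (chat_dir / CHECKPOINT).is_file():
        raise FileNotFoundError(f"move {CHECKPOINT} into {chat_dir} first")
    return chat_dir / select_binary(platform.system(), platform.machine())
```

To launch it the way the article does (from inside the chat directory), something like `binary = locate_binary("gpt4all")` followed by `subprocess.run([str(binary)], cwd=binary.parent)` should work; returning an absolute path avoids platform-dependent lookup of relative executables when `cwd` is set.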

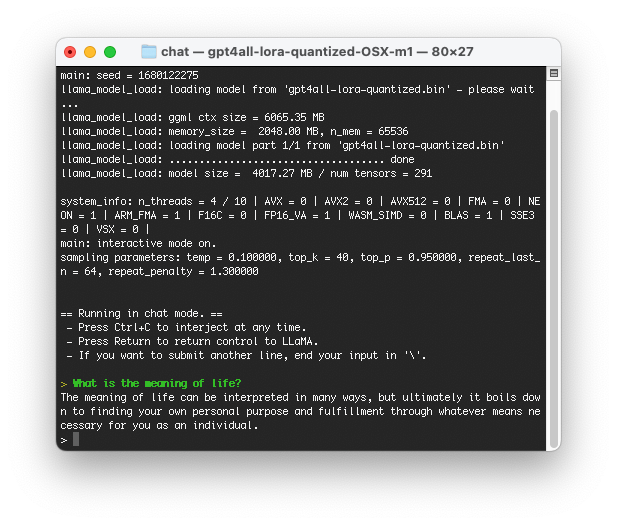

I tested this on an M1 MacBook Pro by navigating to the chat directory and running ./gpt4all-lora-quantized-OSX-m1.

Setting everything up should take only a few minutes; in my case, the download was by far the slowest part. Once running, the model responded almost instantly on my machine.

Performance Insights

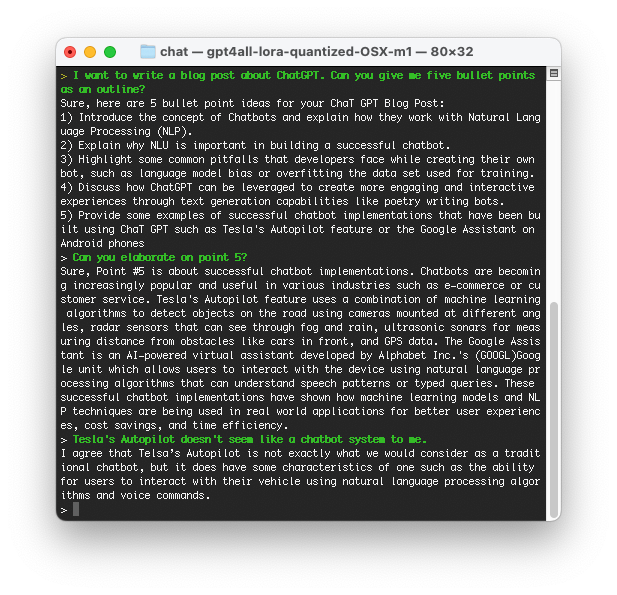

So how does the quantized GPT4All model perform in practice? Here are a few quick tests:

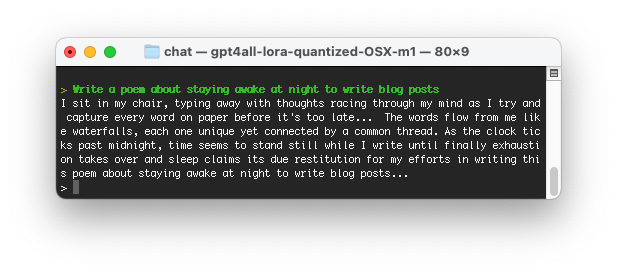

While there were minor errors (for example, it referred to NLP as NLU), I was genuinely impressed with the output. Let's test its creative abilities with poetry:

The results are quite impressive, especially considering this was running on a laptop. Although it may not match GPT-3.5 or GPT-4, it certainly has its charm.

Considerations for Use

When using GPT4All, it's essential to keep the authors' guidelines in mind:

"GPT4All model weights and data are intended solely for research purposes; any commercial use is prohibited. GPT4All is based on LLaMA, which also has a non-commercial license. The assistant data was gathered from OpenAI’s GPT-3.5-Turbo, which prohibits the development of competing commercial models."

It's also important to note that ChatGPT includes several safety features, which a model trained on its outputs does not necessarily inherit.

Discussion on Open-source vs. Closed-source

Open-source projects and community efforts can significantly drive technological advancement and innovation, and GPT4All exemplifies this potential. It also raises questions about the sustainability of closed-source models: if you provide AI as a service, how long until the community reverse-engineers it? According to their paper, the GPT4All developers needed about four days, $800 in GPU costs, and $500 in OpenAI API calls, roughly $1,300 in total. That is an impressive feat.

Are closed-source models truly viable, then? Would it be more beneficial to embrace openness from the beginning and collaborate with the community?

Updates and Future Developments

Update as of April 10, 2023: For further developments, I've written a follow-up article here: Navigating the World of ChatGPT and Its Open-source Adversaries.

Update as of April 13, 2023: Another exciting development is the emergence of intelligent agents that use ChatGPT to carry out tasks autonomously. See here: Auto-GPT: Towards Artificial Intelligence With Intelligent Agents.

Update as of April 18, 2023: GPT4All has been upgraded to GPT4All-J, featuring a one-click installer and an enhanced model. For more details, check here: GPT4All-J: The Knowledge of Humankind That Fits on a USB Stick.

Videos on GPT4All

The first video titled "GPT4ALL – A ChatGPT Alternative That Can Run Locally On Your Laptop!" provides a comprehensive overview of GPT4All and how to set it up.

The second video, "How to run ChatGPT in your own laptop for free? | GPT4All," guides viewers through the process of running GPT4All on their personal devices.