A Comprehensive Overview of Temperature Measurement and Scales


The Evolution of Temperature Measurement

Temperature is a critical physical quantity, yet measuring it presents unique challenges. Establishing a temperature scale necessitates two distinct reference points, and a thermometer must be capable of covering the entire range of temperatures of interest. It wasn't until the 18th century that accurate calibration of thermometers became possible.

In this piece, we will examine how thermometers function and then explore the three widely used temperature scales: Celsius, Fahrenheit, and Kelvin. Lastly, we will look at the extremes of temperature, from conditions near absolute zero to the highest temperatures found in the universe.

The Thermometer: An Instrument for Temperature Measurement

The quest to measure temperature dates back to ancient civilizations such as Greece, Egypt, and Byzantium, but it is Galileo Galilei who is usually credited with the forerunner of the modern thermometer. His thermoscope used the expansion of air on heating as an indicator of temperature.

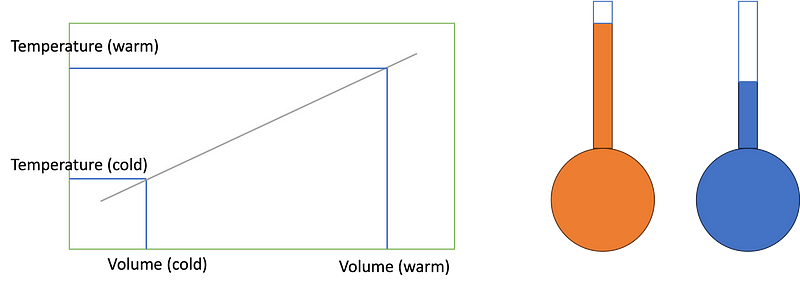

The fundamental operation of a thermometer can be illustrated as follows: a narrow tube contains a liquid, with a pocket of air at the top. As the temperature rises, the liquid expands and climbs within the tube (indicated in orange); as it falls, the liquid contracts (shown in blue). A temperature scale is created by marking the liquid level at two reference temperatures and assuming that the level changes linearly between them.
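The two-point calibration described above can be sketched in a few lines of Python. This is only an illustration: the function name `make_scale` and the column heights are hypothetical, and the sketch assumes the liquid level responds perfectly linearly to temperature.

```python
def make_scale(level_low, temp_low, level_high, temp_high):
    """Build a reading function from two calibration points.

    Assumes the column height varies linearly with temperature
    between (and beyond) the two reference points.
    """
    if level_high == level_low:
        raise ValueError("calibration levels must differ")
    slope = (temp_high - temp_low) / (level_high - level_low)

    def read(level):
        # Linear interpolation from the lower reference point.
        return temp_low + slope * (level - level_low)

    return read


# Hypothetical calibration: the column stands at 20 mm in melting ice
# (0 degrees) and at 220 mm in boiling water (100 degrees).
to_degrees = make_scale(20.0, 0.0, 220.0, 100.0)
print(to_degrees(70.0))  # reading a quarter of the way up the scale
```

Any two well-separated reference temperatures would work here; the choice of ice and boiling water simply mirrors the Celsius convention discussed later.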

This principle was further developed with the mercury thermometer, invented by Daniel Gabriel Fahrenheit in the early 18th century. Mercury expands by about 0.018% in volume per degree Celsius and remains liquid from -38.9 °C to +356.7 °C, which makes it effective for measurements across that range. However, concerns over mercury's toxicity, especially in medical settings, have led to a shift towards electronic thermometers that rely on temperature-sensitive sensors.
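To get a feel for the 0.018% figure, the sketch below estimates how far a mercury column rises per degree. The bulb volume and bore diameter are made-up illustrative values, and the calculation ignores the expansion of the glass itself, so treat the result as a rough order of magnitude.

```python
import math

# Volume expansion of mercury per degree, taken from the text (0.018 %).
MERCURY_EXPANSION_PER_DEGREE = 0.018 / 100


def expanded_volume(volume, delta_t, beta=MERCURY_EXPANSION_PER_DEGREE):
    """Volume after a temperature change, using V * (1 + beta * delta_t)."""
    return volume * (1.0 + beta * delta_t)


def column_rise_mm(bulb_volume_mm3, bore_diameter_mm, delta_t):
    """Rise of the liquid in a cylindrical bore when the bulb warms by delta_t."""
    extra_volume = expanded_volume(bulb_volume_mm3, delta_t) - bulb_volume_mm3
    bore_area = math.pi * (bore_diameter_mm / 2.0) ** 2
    return extra_volume / bore_area


# Hypothetical thermometer: 200 mm^3 bulb feeding a 0.2 mm wide bore.
print(round(column_rise_mm(200.0, 0.2, 1.0), 2), "mm per degree")
```

The tiny volume change becomes a visible movement only because the bore is so narrow, which is why the tube in a liquid thermometer is made as thin as practical.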

Three temperature scales are in common use, each in a different context. The Kelvin scale is favored in science, the Celsius scale is used in countries that follow the metric system, and the Fahrenheit scale is prevalent in the United States.

The Celsius Scale

The Celsius scale, named after Swedish astronomer Anders Celsius (1701–1744), is used in countries that follow the metric system. It originated in the 18th century and is defined by two reference points:

- The freezing point of water is set at 0 °C.

- The boiling point of water at sea level is set at 100 °C.

It is worth noting that while the freezing point of water is largely unaffected by pressure, the boiling point changes with atmospheric pressure: at higher altitudes, water boils below 100 °C. For example, at Jungfraujoch, Switzerland's highest train station (3454 m above sea level), water boils at approximately 89 °C.
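The altitude effect can be estimated with two textbook approximations that are not part of the original discussion and are assumed here purely for illustration: the standard-atmosphere barometric formula for air pressure, and the Antoine equation for the vapor pressure of water (constants valid roughly between 1 °C and 100 °C). Real weather shifts the pressure, so the numbers are indicative only.

```python
import math


def pressure_at_altitude_pa(altitude_m):
    """Air pressure from the standard-atmosphere barometric formula (troposphere)."""
    return 101325.0 * (1.0 - 2.25577e-5 * altitude_m) ** 5.25588


def boiling_point_celsius(pressure_pa):
    """Temperature at which water's vapor pressure equals the given pressure.

    Inverts the Antoine equation log10(P_mmHg) = A - B / (C + T),
    using commonly tabulated constants for water.
    """
    a, b, c = 8.07131, 1730.63, 233.426
    pressure_mmhg = pressure_pa * 760.0 / 101325.0
    return b / (a - math.log10(pressure_mmhg)) - c


for altitude in (0.0, 3454.0):
    p = pressure_at_altitude_pa(altitude)
    print(f"{altitude:6.0f} m: {boiling_point_celsius(p):.1f} degrees C")
```

Under these assumptions the estimate for the altitude of Jungfraujoch lands within about a degree of the figure quoted above.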

The Fahrenheit Scale

The Fahrenheit scale, established by Daniel Gabriel Fahrenheit (1686–1736), is primarily used in the United States. Originally, he defined 0 °F as the freezing point of a concentrated salt solution and placed human body temperature near the top of the scale, at about 96 °F. The scale was later redefined so that 32 °F represents the freezing point of water and 212 °F its boiling point.

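Although the Fahrenheit scale predates Celsius, Celsius has become the standard within the metric system. Because the 100 Celsius degrees between freezing and boiling span 180 Fahrenheit degrees, the conversion is linear: multiply a Celsius value by 9/5 and add 32 to obtain Fahrenheit, or subtract 32 from a Fahrenheit value and multiply by 5/9 to obtain Celsius.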
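As a small illustration, the conversion follows directly from the two pairs of fixed points (0 °C = 32 °F and 100 °C = 212 °F). The function names below are chosen for this sketch only.

```python
def celsius_to_fahrenheit(celsius):
    """100 Celsius degrees span 180 Fahrenheit degrees, offset by 32."""
    return celsius * 9.0 / 5.0 + 32.0


def fahrenheit_to_celsius(fahrenheit):
    """Inverse of celsius_to_fahrenheit."""
    return (fahrenheit - 32.0) * 5.0 / 9.0


for c in (-40.0, 0.0, 37.0, 100.0):
    print(f"{c:6.1f} C = {celsius_to_fahrenheit(c):6.1f} F")
```

The loop includes -40 on purpose: it is the one temperature at which both scales show the same number.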

The Kelvin Scale: SI Units for Temperature

In the International System of Units (SI), thermodynamic temperature is one of the seven base quantities. Most countries and most scientific literature use SI units, measuring temperature in kelvin (K), a unit named in honor of William Thomson, Baron Kelvin (1824–1907), a physicist and engineer at the University of Glasgow.

The Kelvin scale is an absolute scale: 0 K represents absolute zero, the state in which a system has its minimum possible entropy. At this point, only zero-point motion persists, reflecting the kinetic energy of the quantum ground state.

This absolute zero serves as a reference for converting between the Kelvin, Celsius, and Fahrenheit scales. On the Celsius scale, 0 K equals -273.15 °C, and a temperature difference of 1 K corresponds to a difference of 1 °C. There is also the Rankine scale, which shares its zero point with the Kelvin scale (absolute zero) but uses degrees of the same size as Fahrenheit.
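The relationships between the absolute and everyday scales can be sketched as follows. The Rankine scale starts at absolute zero and uses Fahrenheit-sized degrees, so 0 K corresponds to -459.67 °F. Function names are illustrative.

```python
ABSOLUTE_ZERO_CELSIUS = -273.15
ABSOLUTE_ZERO_FAHRENHEIT = -459.67


def kelvin_to_celsius(kelvin):
    """Same degree size as Celsius, shifted so that 0 K is -273.15 degrees C."""
    return kelvin + ABSOLUTE_ZERO_CELSIUS


def celsius_to_kelvin(celsius):
    return celsius - ABSOLUTE_ZERO_CELSIUS


def kelvin_to_rankine(kelvin):
    """Both scales start at absolute zero; only the degree size differs."""
    return kelvin * 9.0 / 5.0


def kelvin_to_fahrenheit(kelvin):
    """Rankine value shifted to the Fahrenheit zero point."""
    return kelvin_to_rankine(kelvin) + ABSOLUTE_ZERO_FAHRENHEIT


freezing = celsius_to_kelvin(0.0)
print(freezing, kelvin_to_rankine(freezing), kelvin_to_fahrenheit(freezing))
```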

Historically, the definition of the kelvin was linked to the properties of water, stating: “The kelvin, unit of thermodynamic temperature, is the fraction 1 / 273.16 of the thermodynamic temperature of the triple point of water.” Since the 2019 revision of the SI, all units are instead defined through a set of seven defining constants, each with an exact numerical value. For the kelvin, the relevant constant is the Boltzmann constant, fixed at exactly 1.380649 × 10⁻²³ J/K.
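In the current SI, the kelvin is tied to the Boltzmann constant, whose value is fixed by definition, so a temperature can be read as a characteristic thermal energy k·T. The short sketch below shows that link; the helper names are illustrative, and k·T is only a characteristic energy scale, not the exact energy of any particular particle.

```python
# Exact values fixed by the 2019 SI definitions.
BOLTZMANN_J_PER_K = 1.380649e-23
ELEMENTARY_CHARGE_C = 1.602176634e-19


def thermal_energy_joule(temperature_k):
    """Characteristic thermal energy k * T in joules."""
    return BOLTZMANN_J_PER_K * temperature_k


def thermal_energy_ev(temperature_k):
    """The same energy expressed in electronvolts."""
    return thermal_energy_joule(temperature_k) / ELEMENTARY_CHARGE_C


# Triple point of water (the former reference point) and a 300 K room.
for t in (273.16, 300.0):
    print(f"{t:7.2f} K -> {thermal_energy_ev(t):.4f} eV")
```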

Matter Near Absolute Zero

The universe is inherently cold: the cosmic microwave background radiation has a temperature of about 2.7 K. The coldest objects known are produced in laboratories, where scientists use sophisticated laser techniques to cool atoms to temperatures of a few nanokelvin.

Is There an Upper Limit to Temperature?

While absolute zero is a defined threshold, one may wonder whether there is also an upper limit to temperature. The highest temperatures in the universe occur within a star's core during its final stages. In supernova events, the core collapses and heats up to approximately 100 billion kelvin.

Despite this, energy itself has no defined upper limit, leaving open the possibility that even hotter states of matter could exist beyond those observed in supernovae.

