Understanding LLMs: Essential Insights for Developers — Part II

Chapter 1: Revisiting LLM Foundations

Welcome back! I trust you had the opportunity to review Part I of this series, where we explored two pivotal concepts of LLM architecture: Tokens and Embeddings.

Let’s move on to Parameters.

In machine learning and large language models (LLMs), parameters are the numerical values that the model learns from the training data. They are the foundation of an LLM: they store what the model has extracted from the data and determine how it carries out intricate tasks.

Think of parameters as the internal configurations of a neural network that can be modified through training, thereby enhancing the model's ability to produce accurate predictions and outputs.

Parameters are primarily categorized into two types:

- Weights — The connections between neurons across various layers of the LLM. Weights dictate the degree of influence one neuron's output has on the activation of another neuron.

- Biases — These are learned offsets added to a neuron's weighted sum of inputs before the activation function is applied. They shift the output, so a neuron can produce a non-zero response even when its weighted inputs sum to zero.
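To make the two parameter types concrete, here is a minimal sketch of a single artificial neuron. The function name `neuron_output`, the sigmoid activation, and the example numbers are assumptions chosen for illustration; real LLM layers apply the same idea to very large matrices.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of inputs plus bias, passed through a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

inputs = [1.0, 2.0]
weights = [0.5, -0.25]  # illustrative values, not taken from any real model

# The weighted sum here is 0.5*1.0 + (-0.25)*2.0 = 0.0.
print(neuron_output(inputs, weights, bias=0.0))  # sigmoid(0.0) = 0.5
print(neuron_output(inputs, weights, bias=1.0))  # sigmoid(1.0), about 0.731
```

Changing only the bias moves the output from 0.5 to roughly 0.731, which is the shifting effect described in the list above.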

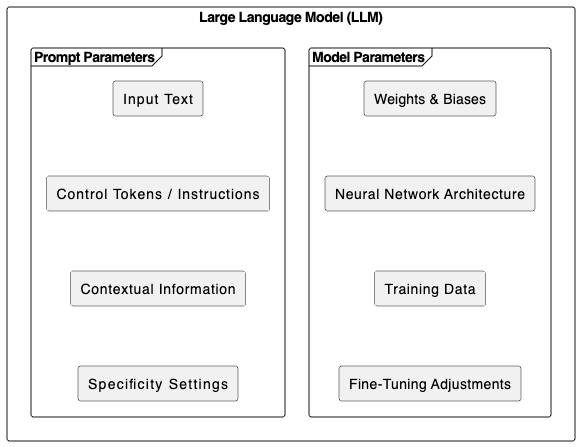

Another important distinction is between Model Parameters and Prompt Parameters. Model Parameters are the weights and biases learned during training. They let the model capture relationships and language structures, and they shape several aspects of its behavior:

- Context and Meaning — Parameters help LLMs recognize the relevance of words in a sentence to each other.

- Text Generation — They influence how a model constructs coherent sentences.

- Model Size — The number of parameters is often associated with the model's size, although a larger size does not inherently mean better performance.

- Fine-Tuning — Further training adjusts a pre-trained model's parameters (its weights and biases) on task-specific data, refining the model for specific tasks.
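As a rough illustration of how model size relates to the parameter count, the sketch below counts the weights and biases of a small fully connected network. The helper `count_dense_parameters` and the layer sizes are hypothetical; LLMs use transformer layers with a more involved breakdown, but the counting principle is the same.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-to-output connection
        total += n_out         # one bias per output unit
    return total

# 4 inputs -> 8 hidden units -> 2 outputs
print(count_dense_parameters([4, 8, 2]))  # (4*8 + 8) + (8*2 + 2) = 58
```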

Distinction Between Model and Prompt Parameters

Prompt Parameters pertain to the variables or elements included in the model's input:

- Input Text: The actual text of the prompt directs the model's expected output.

- Control Tokens or Instructions: These may encompass special tokens or structured guidelines that shape the model's response style, length, or content.

- Contextual Information: Any relevant background information in the prompt aids the model in generating a suitable response.

- Parameters for Specificity: In advanced applications, prompt parameters can include directives on how closely the model should adhere to known information versus creating new content.
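The prompt components listed above can be sketched as a small helper that assembles them into one input string. The function `build_prompt` and the `Instruction:`, `Context:`, and `Input:` labels are assumptions made for this example, not a format that any particular model requires.

```python
def build_prompt(instruction, input_text, context=""):
    """Join the prompt parts into one string, skipping an empty context."""
    parts = [f"Instruction: {instruction}"]
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Input: {input_text}")
    return "\n\n".join(parts)

prompt = build_prompt(
    instruction="Summarize the text in one sentence and use only the facts given.",
    context="The reader is new to seabirds.",
    input_text="The Atlantic puffin is a seabird species in the auk family.",
)
print(prompt)
```

Here the instruction also carries the specificity directive ("use only the facts given"). Nothing in the model's weights changes; only the input does.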

Developer: Are we discussing Prompt Engineering here?

Absolutely! This leads us to our next significant topic: Prompting—the art of crafting specific inputs to elicit desired outputs from the model.

Prompts can vary in complexity, from simple questions to elaborate sets of instructions, including:

- Direct Instructions

- Question-Based Prompts

- Conversational Prompts

- Creative Prompting

- Zero-Shot or Few-Shot Learning

Prompt Engineering is the practice of designing and optimizing these prompting strategies.

#### Examples of Prompting Techniques

Direct Instruction

User: Analyze the following text for emotional tone and key themes, then summarize your findings: The Atlantic puffin (Fratercula arctica) is a seabird species in the auk family and is the only puffin native to the Atlantic Ocean...

LLM: The provided text offers a factual description of the Atlantic puffin, focusing on its characteristics and migration patterns. The tone is neutral and informative, with no personal opinions expressed.

Key themes include:

- Species Identification

- Physical Description

- Habitat and Behavior

Question-Based

User: Can you explain how blockchain technology works and its implications for the financial industry?

This style encourages the model to provide relevant insights into blockchain technology and its applications in finance.

Conversational

User: I’m contemplating a career switch but am uncertain about the associated risks. What should I take into account before making a decision, and how can I prepare for this transition?

This prompt invites a nuanced dialogue, asking the model for thoughtful advice on a complex personal choice.

Creative Prompting

User: Write a story set in a dystopian future where humans coexist with advanced AI, focusing on a human character who uncovers a hidden truth about AI that alters their understanding of reality.

This prompting style encourages the model to create an imaginative narrative based on the provided elements.

Zero-Shot Learning

User: Explain the principle behind solar panels and their role in sustainable energy, along with the challenges in their widespread use.

The model responds by providing information on solar panels without prior examples, relying solely on its pre-trained knowledge.

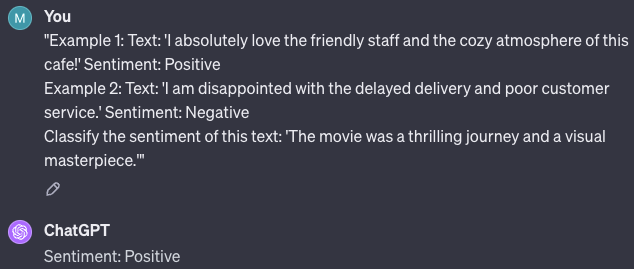

Few-Shot Prompting

This technique involves providing several examples followed by a new question, prompting the model to respond in a similar format and level of detail.
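A minimal sketch of how a few-shot prompt might be assembled is shown below. The `Q:`/`A:` layout, the helper name `build_few_shot_prompt`, and the sample questions are illustrative assumptions. The prompt ends with an unanswered `A:` so that the model continues in the pattern set by the examples.

```python
def build_few_shot_prompt(examples, new_question):
    """Format (question, answer) pairs followed by the unanswered question."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {new_question}\nA:")
    return "\n\n".join(blocks)

examples = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
]
print(build_few_shot_prompt(examples, "What is the capital of Canada?"))
```

Passing an empty list of examples yields a zero-shot prompt: the question alone, with no demonstrations.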

Developer: So far, we’ve discussed Tokenization, Embeddings, Parameters, and Prompting. Is there anything else to cover?

Yes, the final piece to understand is Fine-Tuning, which involves refining a pre-trained model with a specific dataset to enhance its capabilities.

Key Aspects of Fine-Tuning

- Pre-Trained Models: The process begins with a model trained on a broad dataset, equipping it with general knowledge.

- Specialized Dataset: The model is then further trained on a dataset specific to the intended task or domain, such as medical texts for healthcare applications.

- Adjusting Parameters: Fine-tuning involves slight adjustments to the model's parameters to align with the new data.

- Reduced Training Time: Since the model starts pre-trained, fine-tuning is usually quicker and requires less data than building a model from scratch.

- Task-Specific Performance: The aim is to improve the model's accuracy and relevance for a particular task.
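The aspects above can be illustrated with a deliberately tiny stand-in for a model: a line `y = w*x + b` with two parameters. This is only a sketch under stated assumptions. The "pre-trained" values, the dataset, the learning rate, and the step count are all made up for the example, and real LLM fine-tuning relies on deep-learning frameworks and far larger models.

```python
def mean_squared_error(w, b, data):
    """Average squared difference between predictions w*x + b and targets y."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, learning_rate=0.05, steps=50):
    """Run a few gradient-descent steps starting from existing parameters."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical "pre-trained" parameters, standing in for general knowledge (y = 2x).
pretrained_w, pretrained_b = 2.0, 0.0

# Small task-specific dataset that follows y = 2x + 1.
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

before = mean_squared_error(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
after = mean_squared_error(tuned_w, tuned_b, task_data)
print(f"error before: {before:.3f}, error after: {after:.3f}")
```

Because training starts from parameters that are already close to the target, a handful of small updates on four data points is enough to reduce the error. That mirrors the reduced training time and data needs described above.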

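Custom GPTs from OpenAI, which are versions of ChatGPT tailored for specific purposes, are sometimes cited as an illustration of Fine-Tuning. They are customized mainly through instructions and uploaded knowledge files rather than by retraining the model's weights, so they sit closer to prompting. A clearer illustration is the case mentioned above: a general pre-trained model that is further trained on medical texts for healthcare applications.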

Developer: This is an excellent overview. Is there anything more to add?

That covers the essentials. For developers to thrive in today’s tech landscape, a strong understanding of LLMs and the intricacies of prompting and fine-tuning is vital.

Bear in mind, these models are not merely tools for the present but foundational elements for a future where human-machine interactions become increasingly sophisticated and beneficial.

Thanks for your time!

The first video, titled "[1hr Talk] Intro to Large Language Models," delves into the fundamental concepts of LLMs, explaining their architecture and real-world applications.

The second video, "Development with Large Language Models Tutorial – OpenAI, Langchain, Agents, Chroma," offers a comprehensive guide on building applications utilizing LLMs, showcasing various tools and methodologies.