# Unlocking Local Access to Large Language Models with LM Studio


Chapter 1: Introduction to LM Studio

In the fast-moving field of artificial intelligence, high-performance language models have typically been accessible only to people with substantial computing resources and technical know-how. LM Studio is changing that by offering a user-friendly interface for working with a variety of large language models (LLMs), including Google's Gemma series.

Bridging the Divide with LM Studio

LM Studio is aimed at anyone who wants to explore open-source LLMs on their own computer. Although LM Studio itself is not open source, it is built on the open-source llama.cpp library, which lets it run a broad range of models compatible with the ggml tensor library. The app is available for Windows and Mac, with a Linux version in development, and needs about 16 GB of RAM to run models effectively.

Its user-centric design simplifies downloading models such as Zephyr 7B and Codellama Instruct 7B from the Hugging Face Hub, loading them, and then running them entirely offline. A Local Inference Server feature adds API access for integrating with other applications, and because the server follows the OpenAI API format, code written against that API can be adapted to it with little effort.
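Because the local server follows the OpenAI request and response shape, a chat request is plain JSON over HTTP. The sketch below uses only Python's standard library. It assumes the server is running at http://localhost:1234/v1 (the default address at the time of writing; check the address shown in the app), and `"local-model"` is a hypothetical placeholder to replace with the identifier of whichever model you have loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at this address.
# Change it if your app reports a different host or port.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload.

    "local-model" is a hypothetical placeholder; use the identifier of the
    model loaded in LM Studio.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Return the assistant's text from an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str, model: str = "local-model", timeout: float = 120.0) -> str:
    """POST one prompt to the local chat completions endpoint and return the reply."""
    payload = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

With a model loaded and the server started, `print(chat("Explain quantization in one sentence."))` should print the model's answer. Keeping payload construction and reply extraction in separate functions makes it easy to swap in a different client later without touching the request format.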

Gemma: A Revolutionary Small-Sized LLM

Gemma marks a significant step toward making high-performance AI models accessible. It comes in two sizes, a 7-billion-parameter model and a more compact 2-billion-parameter variant, both designed for efficiency so they can be deployed in more constrained settings than larger LLMs. Versions are suitable for GPUs, TPUs, CPUs, and even mobile devices, and the models perform strongly on evaluations of language comprehension, reasoning, and safety.
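Why model size matters for a machine with around 16 GB of RAM can be seen with rough arithmetic: the weights alone take about (parameters × bits per weight ÷ 8) bytes. The sketch below applies that formula to the nominal 2B and 7B sizes. It is a simplification under stated assumptions: actual Gemma parameter counts, including embeddings, are somewhat larger; real quantization formats store extra scale data and use a bit more than the nominal bits per weight; and the KV cache and runtime overhead are ignored.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real usage
    is higher than this estimate.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Nominal sizes from the text; true parameter counts are somewhat larger.
for name, params in [("Gemma 2B", 2e9), ("Gemma 7B", 7e9)]:
    for bits in (16, 8, 4):
        print(f"{name} @ {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, a 7B model at 16 bits needs about 14 GB for its weights, nearly filling a 16 GB machine, while a 4-bit quantization brings that to roughly 3.5 GB, and the 2B model to about 1 GB. That gap is one reason quantized, compact models are a good fit for local use.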

Testing Gemma's Capabilities in LM Studio

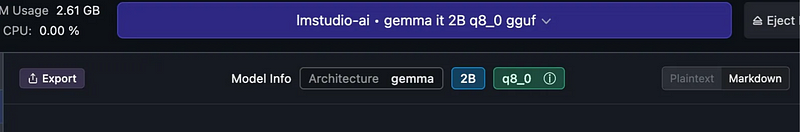

Having Gemma available in LM Studio makes it easy to evaluate its capabilities. Experiments with a quantized version of the Gemma 2B model show its strengths in language comprehension, commonsense reasoning, and question answering, among other areas. These results demonstrate Gemma's solid performance across diverse domains and highlight the potential of smaller LLMs to deliver advanced AI features with lower computational requirements.
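For readers who want to probe a local model in a similar spirit, a tiny spot-check harness can help. This is a hypothetical sketch, not the method behind the experiments described above: it sends each prompt through any function you supply and counts how many replies contain an expected answer. The prompts and the case-insensitive substring scoring are illustrative assumptions; crude matching like this is fine for quick manual probing but is no substitute for a proper benchmark.

```python
from typing import Callable, List, Tuple


def spot_check(ask: Callable[[str], str],
               cases: List[Tuple[str, str]]) -> float:
    """Return the fraction of prompts whose reply contains the expected answer.

    Matching is a case-insensitive substring test, a deliberately crude scorer.
    """
    if not cases:
        return 0.0
    hits = 0
    for prompt, expected in cases:
        reply = ask(prompt)
        if expected.lower() in reply.lower():
            hits += 1
    return hits / len(cases)


# Hypothetical probes touching the areas mentioned above.
CASES = [
    ("What is the capital of France? Answer in one word.", "Paris"),
    ("Is ice hotter or colder than boiling water? Answer in one word.", "colder"),
    ("Which planet is closest to the Sun?", "Mercury"),
]
```

Pass as `ask` any function that sends a prompt to LM Studio's local server and returns the reply text, for example a thin wrapper around its OpenAI-compatible chat endpoint. Taking a callable rather than a URL keeps the scorer independent of how the model is served, so the same cases can be rerun against the 2B and 7B variants.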

Conclusion: Promoting AI Exploration

With its support for Gemma and other models, LM Studio exemplifies the shifting dynamics of AI research and development. By lowering the barriers to experimenting with LLMs, it serves not just as a tool but as a catalyst for innovation, empowering enthusiasts, developers, and researchers to push the boundaries of AI. Whether for academic work, application development, or personal exploration, LM Studio provides a flexible and robust platform for exploring large language models, making the next generation of AI more accessible than ever.

Chapter 2: Practical Tutorials for LM Studio

This tutorial walks step by step through running open-source LLMs locally with LM Studio.

This no-code tutorial shows how to run open-source LLMs locally with LM Studio, an approach accessible to everyone regardless of technical skill level.